Article-Journal

In Journal of Artificial Intelligence Research (JAIR), 2023

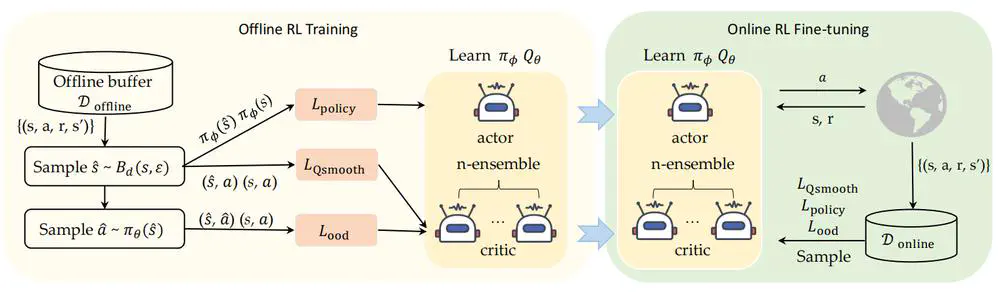

We propose the Robust Offline-to-Online (RO2O) algorithm, designed to enhance offline policies through uncertainty and smoothness, and to mitigate the performance drop in online adaptation.

In Transactions on Machine Learning Research (TMLR), 2025

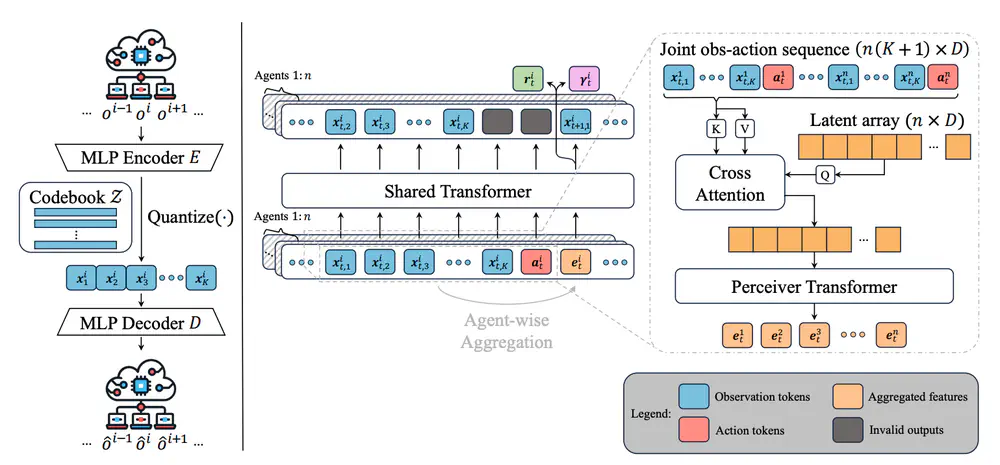

We propose a novel world model for MARL that learns decentralized local dynamics for scalability and combines them with a centralized representation aggregated from all agents.

In Journal of the American Statistical Association (JASA), 2025

This paper proposes an offline Wasserstein-based approach to estimate the joint distribution of multivariate discounted cumulative rewards, establishes finite sample error bounds in the batch setting, and demonstrates its superior performance through extensive numerical studies.