Image credit: Chenjia Bai

Abstract

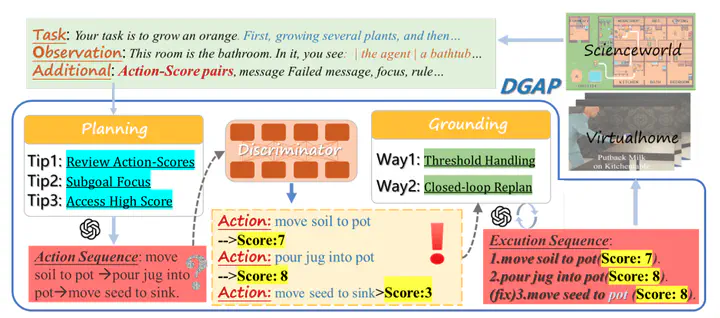

Large Language Models (LLMs) have shown remarkable reasoning capabilities in various domains, yet they struggle with complex embodied tasks, which require a coherent long-term policy and a context-sensitive understanding of the environment. Previous work refined LLMs using outcome-supervised feedback, which can be costly and ineffective. In this work, we introduce Discriminator-Guided Action Optimization (DGAP), a framework that optimizes LLM action plans via step-wise signals. Specifically, we use a limited set of demonstrations to train a discriminator that learns a score function, which assesses the alignment between LLM-generated actions and the underlying optimal ones at every step. Guided by the discriminator, LLMs are prompted to generate actions that maximize the score, using historical action-score pair trajectories as guidance. Under mild conditions, DGAP resembles critic-regularized optimization and is shown to yield a stronger policy than the LLM planner. In experiments with different LLMs (GPT-4, Llama3-70B) on ScienceWorld and VirtualHome, our method achieves superior performance and better efficiency than previous methods.
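
The step-wise loop described above can be illustrated with a minimal sketch. It is not the authors' implementation: `build_prompt`, `optimize_step`, `toy_planner`, and `toy_discriminator` are hypothetical names, the planner stands in for an LLM call, and the discriminator is a fixed lookup table rather than a score function learned from demonstrations. The round limit and score threshold are likewise assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# One (action, score) pair per proposal made at the current step.
History = List[Tuple[str, float]]


def build_prompt(task: str, history: History) -> str:
    """Show earlier proposals and their scores so the planner can improve on them."""
    lines = [f"Task: {task}", "Previous proposals and scores:"]
    lines += [f"- {action} -> {score:.2f}" for action, score in history]
    lines.append("Propose an action with a higher score.")
    return "\n".join(lines)


def optimize_step(
    task: str,
    propose: Callable[[str], str],   # stand-in for the LLM planner
    score: Callable[[str], float],   # stand-in for the learned discriminator
    max_rounds: int = 5,             # assumed budget, not from the paper
    threshold: float = 0.9,          # assumed acceptance score, not from the paper
) -> Tuple[str, History]:
    """Re-prompt the planner with action-score history and keep the best action."""
    history: History = []
    for _ in range(max_rounds):
        action = propose(build_prompt(task, history))
        action_score = score(action)
        history.append((action, action_score))
        if action_score >= threshold:
            break
    best_action = max(history, key=lambda pair: pair[1])[0]
    return best_action, history


def toy_planner(prompt: str) -> str:
    """Hypothetical planner: proposes the next candidate not yet listed in the prompt."""
    candidates = ["open door", "look around", "pick up thermometer"]
    tried = [c for c in candidates if f"- {c} ->" in prompt]
    return candidates[min(len(tried), len(candidates) - 1)]


def toy_discriminator(action: str) -> float:
    """Hypothetical score table; the paper learns this function from demonstrations."""
    table = {"open door": 0.2, "look around": 0.5, "pick up thermometer": 0.95}
    return table.get(action, 0.0)


best, history = optimize_step(
    "measure the water temperature", toy_planner, toy_discriminator
)
print(best)
print(history)
```

In this toy run the planner is re-prompted until a proposal clears the assumed threshold, and the highest-scoring action is executed. A real system would replace `toy_planner` with an LLM call and `toy_discriminator` with the trained score function.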