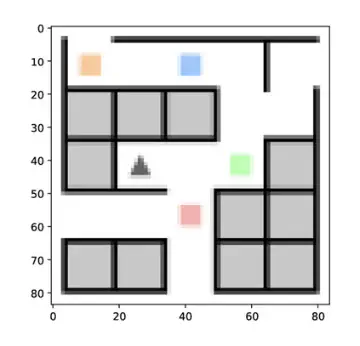

Image credit: Chenjia Bai

Abstract

In the reinforcement learning (RL) literature, off-policy evaluation mainly focuses on estimating the value of a target policy from pre-collected data generated by some behavior policy. Motivated by the recent success of distributional RL in many practical applications, we study the distributional off-policy evaluation problem in the batch setting when the reward is multivariate. We propose an offline Wasserstein-based approach to simultaneously estimate the joint distribution of a multivariate discounted cumulative reward given any initial state-action pair in an infinite-horizon Markov decision process. A finite-sample error bound for the proposed estimator with respect to a modified Wasserstein metric is established in terms of both the number of trajectories and the number of decision points on each trajectory in the batch data. Extensive numerical studies demonstrate the superior performance of the proposed method.
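To make the target quantity concrete, the following is a minimal sketch of what "the joint distribution of a multivariate discounted cumulative reward given an initial state-action pair" refers to. It is not the proposed estimator: it draws returns by on-policy Monte Carlo rollouts from a simulator rather than from batch data, and it compares only one-dimensional marginals with the plain empirical 1-Wasserstein distance rather than the modified Wasserstein metric of the paper. The two-state MDP, the target policy, the discount factor, the truncation horizon, and all function names are hypothetical choices made for illustration.

```python
import random

GAMMA = 0.9  # discount factor (assumed for illustration)


def step(state, action, rng):
    """Hypothetical 2-state, 2-action MDP with a 2-dimensional reward."""
    # First reward coordinate tracks the state, second tracks the action.
    reward = (state + rng.gauss(0.0, 0.1), action + rng.gauss(0.0, 0.1))
    # The next state equals the action with probability 0.8.
    next_state = action if rng.random() < 0.8 else 1 - action
    return reward, next_state


def target_policy(state, rng):
    """Hypothetical target policy: pick action == state with probability 0.7."""
    return state if rng.random() < 0.7 else 1 - state


def sample_return(state, action, rng, horizon=100):
    """One draw of the (truncated) discounted return vector from (state, action)."""
    total = [0.0, 0.0]
    discount = 1.0
    for _ in range(horizon):
        reward, state = step(state, action, rng)
        total[0] += discount * reward[0]
        total[1] += discount * reward[1]
        discount *= GAMMA
        action = target_policy(state, rng)
    return tuple(total)


def wasserstein_1d(xs, ys):
    """Empirical 1-Wasserstein distance between two equal-size 1-D samples."""
    assert len(xs) == len(ys)
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


rng = random.Random(0)
# Samples from the joint return distribution for two initial state-action pairs.
returns_a0 = [sample_return(0, 0, rng) for _ in range(500)]
returns_a1 = [sample_return(0, 1, rng) for _ in range(500)]
# Compare the first-coordinate marginals of the two return distributions.
dist = wasserstein_1d([z[0] for z in returns_a0], [z[0] for z in returns_a1])
```

Each element of `returns_a0` is one two-dimensional sample of the discounted return, so the list as a whole is an empirical approximation of the joint distribution at that state-action pair. In the batch setting studied here no simulator is available, and such distributions must instead be estimated from trajectories generated by the behavior policy.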