Obtaining Accurate Estimated Action Values in Categorical Distributional Reinforcement Learning.

Image credit: Chenjia Bai

Image credit: Chenjia BaiAbstract

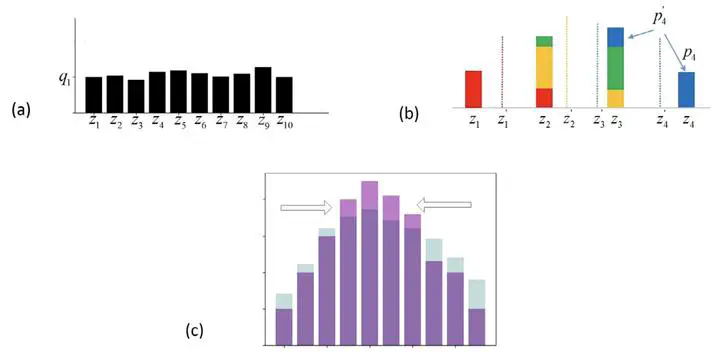

Categorical Distributional Reinforcement Learning (CDRL) uses a categorical distribution with evenly spaced outcomes to model the entire distribution of returns and produces state-of-the-art empirical performance. However, using inappropriate bounds with CDRL may generate inaccurate estimated action values, which affect the policy update step and the final performance. In CDRL, the bounds of the distribution indicate the range of the action values that the agent can obtain in one task, without considering the policy’s performance and state–action pairs. The action values that the agent obtains are often far from the bounds, and this reduces the accuracy of the estimated action values. This paper describes a method of obtaining more accurate estimated action values for CDRL using adaptive bounds. This approach enables the bounds of the distribution to be adjusted automatically based on the policy and state–action pairs. To achieve this, we save the weights of the critic network over a fixed number of time steps, and then apply a bootstrapping method. In this way, we can obtain confidence intervals for the upper and lower bound, and then use the upper and lower bound of these intervals as the new bounds of the distribution. The new bounds are more appropriate for the agent and provide a more accurate estimated action value. To further correct the estimated action values, a distributional target policy is proposed as a smoothing method. Experiments show that our method outperforms many state-of-the-art methods on the OpenAI gym tasks.