Image credit: Chenjia Bai

Abstract

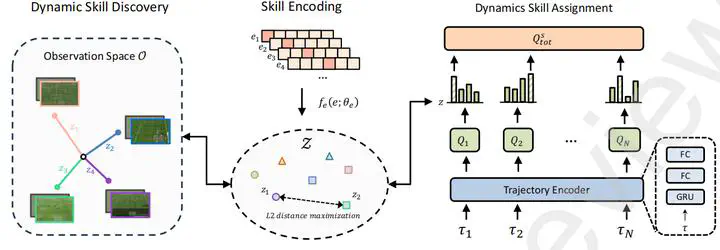

In Multi-Agent Reinforcement Learning (MARL), acquiring suitable behaviors for distinct agents in different scenarios is crucial for improving the collaborative efficacy and adaptability of multi-agent systems. Existing methods address this challenge through role-based and hierarchical paradigms, but they can depend excessively on extrinsic rewards and yield unsatisfactory results, or produce homogeneous behaviors when agents share parameters. In this paper, we propose a novel Dynamic Skill Learning (DSL) framework to enable more effective adaptation and collaboration in complex tasks. Specifically, DSL learns diverse skills without external rewards and then assigns skills to agents dynamically. DSL has two components: (i) dynamic skill discovery, which fosters distinguishable and far-reaching skill learning by using Lipschitz constraints, and (ii) dynamic skill assignment, which leverages a policy controller to dynamically allocate the optimal skill combination for each agent based on its local observations. Empirical results demonstrate that DSL leads to better collaborative ability and significantly improves performance on challenging benchmarks, including StarCraft II and Google Research Football.
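To make the idea of dynamic skill assignment more concrete, the following is a minimal illustrative sketch and not the implementation used in the paper. It assumes a hypothetical linear controller (`SkillController`) with randomly initialised weights standing in for learned parameters, and it simplifies "skill combination" to a single skill index sampled per agent from that agent's local observation. All names, dimensions, and values here are assumptions made for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class SkillController:
    """Hypothetical controller: local observation -> distribution over skills."""

    def __init__(self, obs_dim, num_skills, seed=0):
        self.rng = random.Random(seed)
        # Assumption: random weights stand in for parameters that would be learned.
        self.weights = [
            [self.rng.gauss(0.0, 0.1) for _ in range(obs_dim)]
            for _ in range(num_skills)
        ]

    def skill_probs(self, obs):
        """Return a probability for each skill given one agent's observation."""
        logits = [sum(w * o for w, o in zip(row, obs)) for row in self.weights]
        return softmax(logits)

    def assign(self, observations):
        """Sample one skill index per agent from its own local observation."""
        skills = []
        for obs in observations:
            probs = self.skill_probs(obs)
            choice = self.rng.choices(range(len(probs)), weights=probs, k=1)[0]
            skills.append(choice)
        return skills


# Two agents with 4-dimensional local observations and 3 candidate skills.
controller = SkillController(obs_dim=4, num_skills=3)
observations = [[0.1, 0.0, 0.5, 1.0], [0.9, 0.2, 0.0, 0.3]]
assignment = controller.assign(observations)
```

Because each agent's skill is drawn from a distribution conditioned on its own observation, agents that share the controller's parameters can still end up with different skills. This is the property the abstract contrasts with homogeneous behaviors under shared parameterization.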