Towards Reliable LLM-based Robot Planning via Combined Uncertainty Estimation

Image credit: Chenjia Bai

Abstract

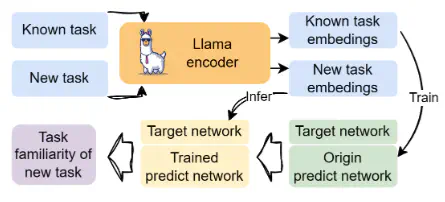

Large language models (LLMs) demonstrate advanced reasoning abilities, enabling robots to understand natural language instructions and generate high-level plans with appropriate grounding. However, LLM hallucinations present a significant challenge, often leading to overconfident plans that may be misaligned or unsafe. To address this issue, we propose Combined Uncertainty Estimation for Reliable Embodied planning (CURE), which decomposes uncertainty into epistemic and intrinsic components for more reliable robot decision-making. Epistemic uncertainty is further subdivided into task clarity (semantic ambiguity) and task familiarity for more accurate evaluation. The overall uncertainty assessments are obtained using random network distillation and multi-layer perceptron (MLP) regression heads driven by LLM features. We validated our approach in two distinct experimental settings: kitchen manipulation and tabletop rearrangement. The results show that, compared to existing methods, our approach yields uncertainty estimates that are more closely aligned with actual execution outcomes.
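
To illustrate the general idea behind random network distillation as a familiarity signal, the following is a minimal toy sketch, not the CURE implementation. It assumes synthetic 4-dimensional vectors as stand-ins for LLM features, a frozen random target with cosine activations, and a linear predictor trained only on "familiar" inputs. All names (`make_target`, `LinearPredictor`, `rnd_score`, the two centers) are hypothetical. The predictor's error against the frozen target tends to stay small near the training data and grow far from it, which is what makes the error usable as a novelty score.

```python
import math
import random


def make_target(in_dim, out_dim, rng):
    """Frozen random network: cosine of random projections. Never trained."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]
    biases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(out_dim)]

    def target(x):
        return [math.cos(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, biases)]

    return target


class LinearPredictor:
    """Trainable predictor that tries to imitate the frozen target."""

    def __init__(self, in_dim, out_dim):
        self.weights = [[0.0] * in_dim for _ in range(out_dim)]
        self.biases = [0.0] * out_dim

    def __call__(self, x):
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.biases)]

    def sgd_step(self, x, y, lr=0.1):
        """One squared-error gradient step on a single (x, y) pair."""
        pred = self(x)
        for j in range(len(y)):
            err = pred[j] - y[j]
            for i in range(len(x)):
                self.weights[j][i] -= lr * err * x[i]
            self.biases[j] -= lr * err


def rnd_score(x, target, predictor):
    """Mean squared predictor-vs-target error; larger suggests less familiar."""
    t, p = target(x), predictor(x)
    return sum((pi - ti) ** 2 for pi, ti in zip(p, t)) / len(t)


def sample_around(center, noise, rng):
    """Draw a synthetic feature vector near the given center."""
    return [c + rng.gauss(0.0, noise) for c in center]


rng = random.Random(0)
IN_DIM, OUT_DIM = 4, 16
target = make_target(IN_DIM, OUT_DIM, rng)
predictor = LinearPredictor(IN_DIM, OUT_DIM)

# Assumed stand-ins for features of seen vs. unseen tasks.
familiar_center = [0.5, 0.5, 0.5, 0.5]
novel_center = [-2.0, -2.0, -2.0, -2.0]

# Train the predictor only on features from the familiar region.
for _ in range(2000):
    x = sample_around(familiar_center, 0.05, rng)
    predictor.sgd_step(x, target(x))


def mean_score(center, n=50):
    """Average RND score over n fresh samples around a center."""
    xs = [sample_around(center, 0.05, rng) for _ in range(n)]
    return sum(rnd_score(x, target, predictor) for x in xs) / len(xs)


familiar_score = mean_score(familiar_center)
novel_score = mean_score(novel_center)
print(f"familiar: {familiar_score:.4f}  novel: {novel_score:.4f}")
```

In a real system the predictor and target would typically both be neural networks operating on actual LLM features; the linear predictor and cosine target here are simplifications chosen to keep the sketch dependency-free.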